It’s always the same. You do a great piece of research and then someone comes along and says, “Ah, but how do you know that’s what the data is telling you? Can we trust the data?” Very often, of course, the person asking the question does so with the best intentions, although not necessarily with a full understanding of the work that’s just been done. The work could be anything from an experiment run to support a claim to a piece of exploratory research looking at a new measurement device. Whatever the experiment, as scientists we still need to be confident that we are actually measuring what we think we are. At the most basic level, we need to be sure the devices we use are telling us what we think they are, and we need to be able to show others that this is the case in order to convince them of our arguments. This can be as simple as ensuring our equipment is calibrated: is what the equipment is telling us correct?

Calibration is a funny thing. I’ve known people calibrate rulers to ensure that what they are measuring is correct, and then recalibrate them after a year, just in case they had magically changed. It is also an often misunderstood, or even misused, term. For instance, I was once asked whether a camera I was using in a clinical study was calibrated. Because the question was not specific enough, I asked “Calibrated for what?”, and was greeted with a quizzical look before the person asked exactly the same question again, as if stuck in a loop. This is where calibration can fall down: the assumption that if something is calibrated it must automatically be trusted. “Of course this is calibrated, and therefore anything it tells you is right.” This is a very dangerous position to be in as a scientist: the device passed its calibration check, therefore everything is fine. It is therefore important to define what a device has been calibrated for, and the limits beyond which that calibration is no longer valid.

A recent case in point: I was designing and building a UV imaging system, and one thing I wanted to know was whether I could tell how much UV was being reflected from a sample just from the photograph. In essence, was there a correlation between the greyness of the sample in the image and the amount of UV it reflected? Sounds simple enough: get some commercial grey calibration standards for photography, image them under UV and that’s it. $100, job done. Except it isn’t job done, because commercial visible light photography standards don’t reflect UV in the same way they reflect visible light. Because of the materials used to make them, they absorb much more UV than visible light, and their absorbance also varies quite dramatically between 400 nm and 300 nm. This change in behaviour means they are not suitable as UVA reflection standards.

So the simple (cheap) option isn’t going to work. What next? You can buy grey standards which are optically neutral, i.e. they reflect the same proportion of light across the whole range from 200 nm to 2000 nm. Great, I’ll get a set of those. This is where you find out that a set of 8 of these standards, each with a different reflectance, costs about $6000. That is slightly beyond most people’s budgets, especially for the casual researcher, and beyond my own budget for an upstream research project. I also needed several standards in the 5% to 30% reflectance range, as this was where my samples fell, so I would have needed custom ones made, which would have pushed the cost up astronomically.
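To make the correlation question concrete, here is a minimal Python sketch of the kind of check I mean; it is an illustration, not the exact analysis I ran. It assumes you know the reflectance of each standard (as a fraction) and have measured the mean pixel value of each standard in a linear (raw, not gamma-corrected JPEG) UV image. The function names and example numbers are hypothetical. It fits a straight line by ordinary least squares, reports R², then inverts the line to estimate a sample’s reflectance from its grey value.

```python
from statistics import mean


def fit_line(reflectances, grey_values):
    """Ordinary least-squares fit of grey = slope * reflectance + intercept.

    Returns (slope, intercept, r_squared). Assumes grey values come from a
    linear (raw) image, so a straight line is a sensible model.
    """
    if len(reflectances) != len(grey_values) or len(reflectances) < 2:
        raise ValueError("need at least two paired measurements")
    mx, my = mean(reflectances), mean(grey_values)
    sxx = sum((x - mx) ** 2 for x in reflectances)
    if sxx == 0:
        raise ValueError("standards must have different reflectances")
    sxy = sum((x - mx) * (y - my) for x, y in zip(reflectances, grey_values))
    slope = sxy / sxx
    intercept = my - slope * mx
    # R^2: fraction of the variation in grey value explained by the line
    ss_res = sum((y - (slope * x + intercept)) ** 2
                 for x, y in zip(reflectances, grey_values))
    ss_tot = sum((y - my) ** 2 for y in grey_values)
    r_squared = 1.0 - ss_res / ss_tot if ss_tot else 1.0
    return slope, intercept, r_squared


def reflectance_from_grey(grey, slope, intercept):
    """Invert the fitted line to estimate reflectance from a grey value."""
    return (grey - intercept) / slope


# Hypothetical readings: four standards between 5% and 30% reflectance
refl = [0.05, 0.10, 0.20, 0.30]
grey = [1000, 2000, 4000, 6000]
slope, intercept, r2 = fit_line(refl, grey)
print(f"slope={slope:.1f} intercept={intercept:.1f} R^2={r2:.4f}")
print(f"grey 3000 -> reflectance {reflectance_from_grey(3000, slope, intercept):.3f}")
```

The fit is only as trustworthy as the standards behind it. If the standards reflect UV differently from what their nominal (visible light) values suggest, the line will be confidently wrong, which is exactly the problem with the photographic grey cards.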

As it happens, I was able to find a set of 8 of these wideband calibration standards on eBay for a small fraction of the original cost. This let me build more confidence in the validity of my work and further assess the quality and behaviour of my own custom-made standards (shown below). Always keep an eye on eBay for science kit no one needs anymore...

In the absence of a commercially available standard within budget, where do we go now? The standard visible light photography grey cards are cheap but unsuitable, and the commercial lab-grade ones are far too expensive for my own use. At this stage I go back to the literature. Surely I’m not the first person to need these, and sure enough, I’m not. In work going back about 50 years, people had been mixing magnesium oxide (white), carbon black (black) and plaster of Paris in different ratios to make grey standards suitable for UV imaging. The problem was that, as is often the case even in published articles, the methods for making the standards and assessing their usefulness were so nebulous as to be useful only as a guide. I took the principles and guidance from the papers, invested the princely sum of $10 in raw materials and set about making my own. After a few rounds of trial and error I had a set of samples that were much more optically neutral in the UV region, i.e. their reflectance did not change hugely with wavelength. I checked them with a UV-Vis spectrometer to see whether they would be suitable. In effect, I had made something better than the commercial visible light photography standards ($100), but not as good as the commercial lab-grade spectroscopy standards ($6000+). The end result is that for $10 and a little time, I was able to make something that would have cost an inordinate amount to have custom made, and that was ‘good enough’ for what I needed.

That ‘good enough’ also needs to be kept in mind when considering calibration. What is good enough? You don’t need 10 decimal places if you only want to measure to ±1%, for instance.
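The ‘good enough’ idea can be written down as an explicit acceptance test. Below is a minimal sketch, assuming spectrometer output as (wavelength in nm, reflectance in %) pairs. The function names, the 300 to 400 nm band and the ±1 percentage-point tolerance are illustrative choices rather than any standard, and the spectra are made-up numbers, not my measurements. A standard counts as neutral over the band if no reading strays from the band’s mean reflectance by more than the tolerance.

```python
def band_stats(spectrum, lo_nm, hi_nm):
    """Mean reflectance and largest deviation from it within [lo_nm, hi_nm].

    spectrum: iterable of (wavelength_nm, reflectance_percent) pairs.
    """
    values = [r for wl, r in spectrum if lo_nm <= wl <= hi_nm]
    if not values:
        raise ValueError("no readings inside the requested band")
    band_mean = sum(values) / len(values)
    max_dev = max(abs(v - band_mean) for v in values)
    return band_mean, max_dev


def is_neutral(spectrum, lo_nm, hi_nm, tolerance_pct):
    """True if every reading is within tolerance_pct points of the band mean."""
    _, max_dev = band_stats(spectrum, lo_nm, hi_nm)
    return max_dev <= tolerance_pct


# Hypothetical spectra, for illustration only
custom_disc = [(300, 19.0), (325, 19.5), (350, 20.0), (375, 20.3), (400, 20.5)]
photo_card = [(300, 8.0), (350, 12.0), (400, 18.0)]

for name, spec in [("custom disc", custom_disc), ("photo card", photo_card)]:
    mean_r, dev = band_stats(spec, 300, 400)
    verdict = "neutral" if is_neutral(spec, 300, 400, 1.0) else "not neutral"
    print(f"{name}: mean {mean_r:.1f}%, max deviation {dev:.1f} points -> {verdict}")
```

Choosing the tolerance is the real decision here: it should come from how precisely you need to measure, not from how many decimal places the spectrometer can report.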

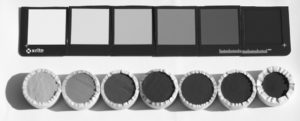

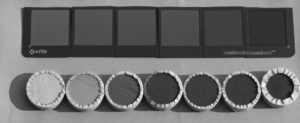

The difference between the standard photographic grey tiles and my custom ones can be seen below, first in a visible light photograph and second in a photograph taken in UV only. Both were taken in sunlight with my multispectral imaging system, using a UV/IR cut filter for the visible image and a Baader U UV filter for the UV image. The standard photographic grey tiles are the squares at the top, and my custom ones are the circles underneath.

Visible light image.

UV image.

The exposures have been chosen so that my custom standards have similar greyscale values in the visible and UV images, reflecting the UV-Vis spectrometer data. This also highlights how much more UV than visible light the normal photographic grey tiles absorb, and why they are not suitable for UV imaging calibration.
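One way to put numbers on ‘similar greyscale values’ is to average the pixels inside each tile in both images and compare them. The sketch below assumes the visible and UV images are the same size and framed identically (so one region of interest fits both), that pixel values are linear, and that images are plain lists of rows; the names and pixel values are hypothetical. With exposures set as described, a ratio near 1 means a tile looks much the same in UV as in visible light, while a ratio well below 1 flags a tile that absorbs more UV.

```python
def roi_mean(image, x0, y0, width, height):
    """Mean pixel value inside a rectangle of a greyscale image (list of rows)."""
    pixels = [p for row in image[y0:y0 + height] for p in row[x0:x0 + width]]
    if not pixels:
        raise ValueError("region of interest is empty")
    return sum(pixels) / len(pixels)


def uv_to_visible_ratio(visible, uv, roi):
    """Ratio of UV to visible mean grey value for one tile; roi = (x0, y0, w, h)."""
    vis_mean = roi_mean(visible, *roi)
    if vis_mean == 0:
        raise ValueError("visible region is black; ratio undefined")
    return roi_mean(uv, *roi) / vis_mean


# Hypothetical 4x4 images: left half a custom disc, right half a photo card
visible = [[120, 120, 100, 100] for _ in range(4)]
uv = [[118, 118, 40, 40] for _ in range(4)]
print(uv_to_visible_ratio(visible, uv, (0, 0, 2, 4)))  # custom disc
print(uv_to_visible_ratio(visible, uv, (2, 0, 2, 4)))  # photo card
```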

Going through what’s necessary to ensure that our work is actually telling us what we think it is can be almost as difficult and time consuming as the work itself. It is often costly in money, time or both, and can sometimes require items and standards which may not even exist and have to be developed specifically for the task at hand. But what is the price of not doing this? Lack of certainty, lack of credibility, and reliance on faith over fact (not in itself a bad thing, but it can make getting to the bottom of things in science problematic). Are these really prices worth paying in science? In reality, this piece could just as easily have been entitled “The cost of not calibrating”, for what price do we put on the credibility of our work?